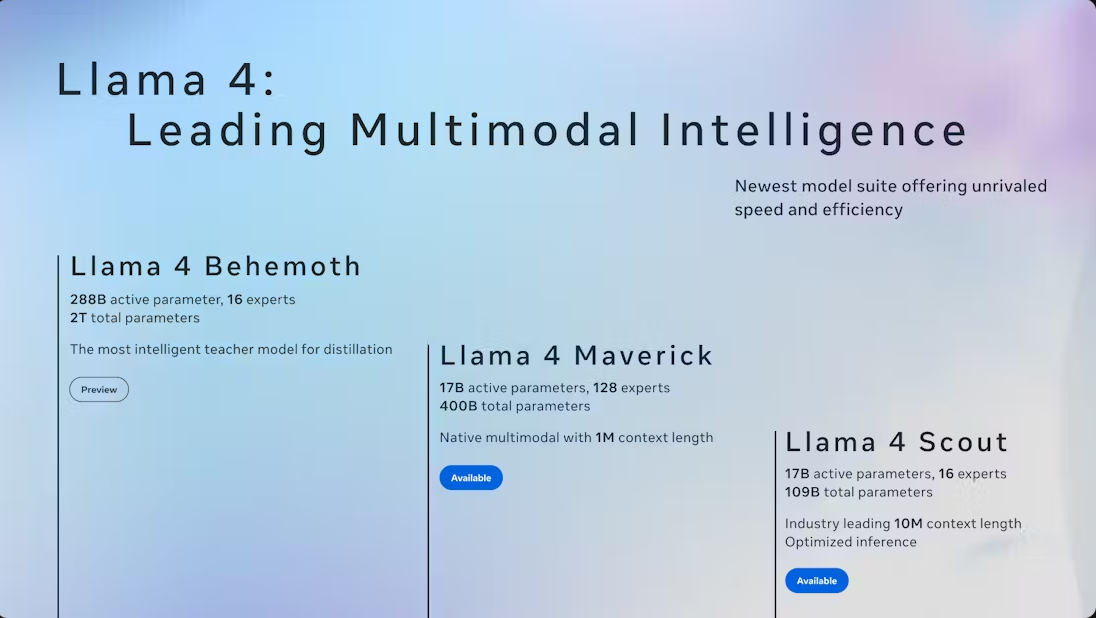

Llama 4

Natively multimodal AI models enabling text and image experiences with industry-leading performance via mixture-of-experts architecture.

Llama 4 integrates text and visual understanding in a single, cohesive framework. It is built on a mixture-of-experts architecture and is positioned as industry-leading in performance and versatility across diverse applications. Because it natively accepts both text and image inputs, it supports richer, more intuitive interactions between people and machines.

Designed with developers and creators in mind, the model is intended for building more dynamic and responsive AI-driven experiences. Because it can process and interpret text and images together, it is suited to industries ranging from content creation to customer support. The underlying architecture is designed for efficiency and scalability, which makes it practical for both experimental projects and large-scale deployments.

As anticipation builds around its upcoming release, the AI community is eager to explore its potential. Early discussions highlight its promise in bridging different modes of communication, which could enable more natural and immersive applications. With its combination of modern design and user-centric features, it is positioned to expand what’s possible in artificial intelligence.